Abstract

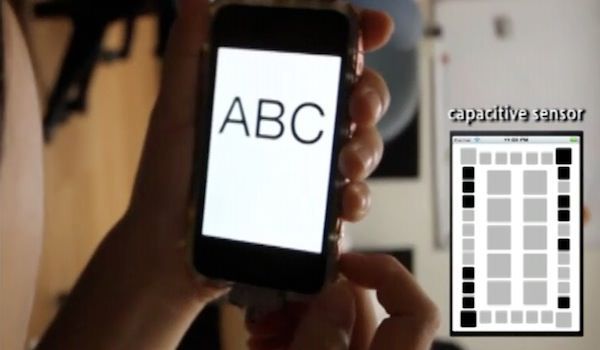

Automatic screen rotation improves the viewing experience and usability of mobile devices, but current gravity-based approaches do not support postures such as lying on one side, and manual rotation switches require explicit user input. iRotateGrasp automatically rotates the screens of mobile devices to match users’ viewing orientations based on how users grasp the devices. Our insight is that users’ grasps are consistent within each orientation but differ significantly between orientations. Our prototype uses a total of 44 capacitive sensors along the four sides and the back of an iPod Touch, and uses a support vector machine (SVM) to recognize grasps at 25 Hz. We collected usage data from 6 users under 108 different combinations of posture, orientation, touchscreen operation, and left/right/both hands. Our offline analysis showed that our grasp-based approach is promising, with 80.9% accuracy when training and testing on different users, and up to 96.7% if users are willing to train the system. Our user study (N=16) showed that iRotateGrasp had an accuracy of 78.8% and was 31.3% more accurate than gravity-based rotation.